|

Hi everyone! I'm a senior research scientist at Google DeepMind. Prior to this, I completed my PhD at UC Berkeley, where I was fortunate to be advised by Professor Pieter Abbeel. Email / CV / Google Scholar |

|

|

My current research is focused on world models for control. |

|

Hao Liu*, Wilson Yan*, Matei Zaharia, Pieter Abbeel In Submission project page tweet A vision-language model trained on million-length sequences of images, language, and video. |

|

Wilson Yan, Andrew Brown, Pieter Abbeel, Rohit Girdhar, Samaneh Azadi In Submission project page tweet A motion-conditioned image animation approach to video editing that enables a diverse range of edit types

|

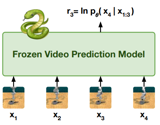

Alejandro Escontrela*, Ademi Adeniji*, Wilson Yan*, Ajay Jain, Xue Bin Peng, Ken Goldberg, Youngwoon Lee, Danijar Hafner, Pieter Abbeel NeurIPS 2023 project page tweet We show that video prediction likelihoods can provide a denser reward signal for training reinforcement learning agents

|

Hao Liu, Wilson Yan, Pieter Abbeel NeurIPS 2023 tweet We learn an aligned text-image token representation without text-image data, which can then be used for downstream VQA and classification tasks via in-context learning with an LLM

|

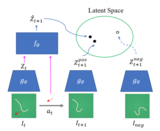

Wilson Yan, Danijar Hafner, Stephen James, Pieter Abbeel ICML 2023 project page tweet A vector-quantized latent dynamics video prediction model that learns compressed representations to efficiently condition on long videos of hundreds of frames during both training and generation

|

Wilson Yan, Ryo Okumura, Stephen James, Pieter Abbeel project page A video generation architecture that leverages an object-centric transformer design for more scalable and efficient video generation

|

Wilson Yan*, Yunzhi Zhang*, Pieter Abbeel, Aravind Srinivas project page An efficient and scalable video generation architecture that first learns a VQ-VAE to compress video data, and then learns a GPT-style transformer to model discrete latent codes |

|

Wilson Yan, Ashwin Vangipuram, Pieter Abbeel, Lerrel Pinto CoRL 2020 project page blog post Using contrastive methods to learn plannable representations for manipulating deformable objects such as rope and cloth |

|

Wilson Yan, Jonathan Ho, Pieter Abbeel Preprint Performing image interpolation and semantic manipulation (e.g. hair color, smiling, gender) on facial images with a PixelCNN, using Fisher scores as an embedding space

|

Yilin Wu, Wilson Yan, Thanard Kurutach, Lerrel Pinto, Pieter Abbeel RSS 2020 project page blog post Sim-to-real transfer for learning a cloth-spreading policy on a real robot without any human demonstrations

|

|

|

CS 188: Introduction to Artificial Intelligence

Undergraduate Student Instructor, CS188 Fall 2018

Undergraduate Student Instructor, CS188 Spring 2019

Head Undergraduate Student Instructor, CS188 Fall 2019

CS 294-158: Deep Unsupervised Learning

Undergraduate Student Instructor, CS294-158 Spring 2020 |